Project flow#

LaminDB allows tracking data lineage at the level of an entire project.

Here, we walk through exemplified app uploads, pipelines & notebooks following Schmidt et al., 2022.

A CRISPR screen reading out a phenotypic endpoint on T cells is paired with scRNA-seq to generate insights into IFN-γ production.

These insights get linked back to the original data through the steps taken in the project to provide context for interpretation & future decision making.

More specifically: Why should I care about data flow?

Data flow tracks data sources & transformations to trace biological insights, verify experimental outcomes, meet regulatory standards, increase the robustness of research and optimize the feedback loop of team-wide learning iterations.

While tracking data flow is easier when it’s governed by deterministic pipelines, it becomes hard when it’s governed by interactive human-driven analyses.

LaminDB interfaces with workflow managers for the former and embraces the latter.

Setup#

Init a test instance:

!lamin init --storage ./mydata


✅ saved: User(uid='DzTjkKse', handle='testuser1', name='Test User1', updated_at=2024-02-17 11:19:33 UTC)

✅ saved: Storage(uid='ZseFAdOD', root='/home/runner/work/lamin-usecases/lamin-usecases/docs/mydata', type='local', updated_at=2024-02-17 11:19:33 UTC, created_by_id=1)

💡 loaded instance: testuser1/mydata

💡 did not register local instance on lamin.ai

Import lamindb:

import lamindb as ln

from IPython.display import Image, display

💡 lamindb instance: testuser1/mydata

Steps#

In the following, we walk through exemplified steps covering different types of transforms (Transform).

Note

The full notebooks are in this repository.

App upload of phenotypic data#

Register data through app upload from wetlab by testuser1:

# This function mimics the upload of artifacts via the UI
# In reality, you simply drag and drop files into the UI
def run_upload_crispra_result_app():
    ln.setup.login("testuser1")
    transform = ln.Transform(name="Upload GWS CRISPRa result", type="app")
    ln.track(transform)
    output_path = ln.dev.datasets.schmidt22_crispra_gws_IFNG(ln.settings.storage)
    output_file = ln.Artifact(
        output_path, description="Raw data of schmidt22 crispra GWS"
    )
    output_file.save()


run_upload_crispra_result_app()


💡 saved: Transform(uid='fzNOgdQYemP5bbfz', name='Upload GWS CRISPRa result', type='app', updated_at=2024-02-17 11:19:35 UTC, created_by_id=1)

💡 saved: Run(uid='nW3MBgnRS9k7d54hTbRU', run_at=2024-02-17 11:19:35 UTC, transform_id=1, created_by_id=1)

Hit identification in notebook#

Access, transform & register data in drylab by testuser2:

def run_hit_identification_notebook():
    # log in as another user
    ln.setup.login("testuser2")
    # create a new transform to mimic a new notebook (in reality you just run ln.track() in a notebook)
    transform = ln.Transform(name="GWS CRIPSRa analysis", type="notebook")
    ln.track(transform)
    # access the upload artifact
    input_file = ln.Artifact.filter(key="schmidt22-crispra-gws-IFNG.csv").one()
    # identify hits
    input_df = input_file.load().set_index("id")
    output_df = input_df[input_df["pos|fdr"] < 0.01].copy()
    # register hits in output artifact
    ln.Artifact(output_df, description="hits from schmidt22 crispra GWS").save()


run_hit_identification_notebook()


💡 saved: Transform(uid='KMXWMNPjyZp8Au7e', name='GWS CRIPSRa analysis', type='notebook', updated_at=2024-02-17 11:19:37 UTC, created_by_id=1)

💡 saved: Run(uid='DYMlIYIkKXTDS6UAFHzQ', run_at=2024-02-17 11:19:37 UTC, transform_id=2, created_by_id=1)

Inspect data flow:

artifact = ln.Artifact.filter(description="hits from schmidt22 crispra GWS").one()

artifact.view_lineage()

Sequencer upload#

Upload files from sequencer:

def run_upload_from_sequencer_pipeline():
    ln.setup.login("testuser1")
    # create a pipeline transform
    ln.track(ln.Transform(name="Chromium 10x upload", type="pipeline"))
    # register output files of the sequencer
    upload_dir = ln.dev.datasets.dir_scrnaseq_cellranger(
        "perturbseq", basedir=ln.settings.storage, output_only=False
    )
    ln.Artifact(upload_dir.parent / "fastq/perturbseq_R1_001.fastq.gz").save()
    ln.Artifact(upload_dir.parent / "fastq/perturbseq_R2_001.fastq.gz").save()


run_upload_from_sequencer_pipeline()


💡 saved: Transform(uid='LdIroxuej8fsPNCU', name='Chromium 10x upload', type='pipeline', updated_at=2024-02-17 11:19:39 UTC, created_by_id=1)

💡 saved: Run(uid='12qHcH7rEAWGMrTmImh9', run_at=2024-02-17 11:19:39 UTC, transform_id=3, created_by_id=1)

scRNA-seq bioinformatics pipeline#

Process the uploaded files using a script or workflow manager (see Pipelines) and obtain 3 output files in a directory filtered_feature_bc_matrix/:

def run_scrna_analysis_pipeline():
    ln.setup.login("testuser2")
    transform = ln.Transform(name="Cell Ranger", version="7.2.0", type="pipeline")
    ln.track(transform)
    # access uploaded files as inputs for the pipeline
    input_artifacts = ln.Artifact.filter(key__startswith="fastq/perturbseq").all()
    input_paths = [artifact.stage() for artifact in input_artifacts]
    # register output files
    output_artifacts = ln.Artifact.from_dir(
        "./mydata/perturbseq/filtered_feature_bc_matrix/"
    )
    ln.save(output_artifacts)

    # Post-process these 3 files
    transform = ln.Transform(
        name="Postprocess Cell Ranger", version="2.0", type="pipeline"
    )
    ln.track(transform)
    input_artifacts = [f.stage() for f in output_artifacts]
    output_path = ln.dev.datasets.schmidt22_perturbseq(basedir=ln.settings.storage)
    output_file = ln.Artifact(output_path, description="perturbseq counts")
    output_file.save()


run_scrna_analysis_pipeline()


💡 saved: Transform(uid='XBXZwUEzwJwfq1tI', name='Cell Ranger', version='7.2.0', type='pipeline', updated_at=2024-02-17 11:19:40 UTC, created_by_id=1)

💡 saved: Run(uid='7pDgs4enKdEnyd0yZ3MT', run_at=2024-02-17 11:19:40 UTC, transform_id=4, created_by_id=1)

❗ this creates one artifact per file in the directory - you might simply call ln.Artifact(dir) to get one artifact for the entire directory

💡 saved: Transform(uid='wzVm5MFDBL0pGRiJ', name='Postprocess Cell Ranger', version='2.0', type='pipeline', updated_at=2024-02-17 11:19:41 UTC, created_by_id=1)

💡 saved: Run(uid='ms3IYTSQPrkquaJdNy91', run_at=2024-02-17 11:19:41 UTC, transform_id=5, created_by_id=1)

Inspect data flow:

output_file = ln.Artifact.filter(description="perturbseq counts").one()

output_file.view_lineage()

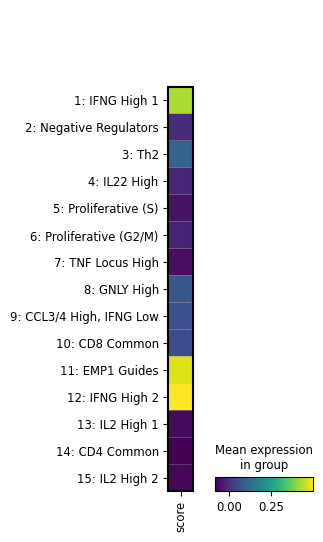

Integrate scRNA-seq & phenotypic data#

Integrate data in a notebook:

def run_integrated_analysis_notebook():
    import scanpy as sc

    # create a new transform to mimic a new notebook (in reality you just run ln.track() in a notebook)
    transform = ln.Transform(
        name="Perform single cell analysis, integrate with CRISPRa screen",
        type="notebook",
    )
    ln.track(transform)

    # access the output files of bfx pipeline and previous analysis
    file_ps = ln.Artifact.filter(description__icontains="perturbseq").one()
    adata = file_ps.load()
    file_hits = ln.Artifact.filter(description="hits from schmidt22 crispra GWS").one()
    screen_hits = file_hits.load()

    # perform analysis and register output plot files
    sc.tl.score_genes(adata, adata.var_names.intersection(screen_hits.index).tolist())
    filesuffix = "_fig1_score-wgs-hits.png"
    sc.pl.umap(adata, color="score", show=False, save=filesuffix)
    filepath = f"figures/umap{filesuffix}"
    artifact = ln.Artifact(filepath, key=filepath)
    artifact.save()
    filesuffix = "fig2_score-wgs-hits-per-cluster.png"
    sc.pl.matrixplot(
        adata, groupby="cluster_name", var_names=["score"], show=False, save=filesuffix
    )
    filepath = f"figures/matrixplot_{filesuffix}"
    artifact = ln.Artifact(filepath, key=filepath)
    artifact.save()


run_integrated_analysis_notebook()


💡 saved: Transform(uid='K2O4YD2BB3fyf0lI', name='Perform single cell analysis, integrate with CRISPRa screen', type='notebook', updated_at=2024-02-17 11:19:45 UTC, created_by_id=1)

💡 saved: Run(uid='qFEN3BlQqtaC7akJnDK3', run_at=2024-02-17 11:19:45 UTC, transform_id=6, created_by_id=1)

WARNING: saving figure to file figures/umap_fig1_score-wgs-hits.png

WARNING: saving figure to file figures/matrixplot_fig2_score-wgs-hits-per-cluster.png

Review results#

Let’s load one of the plots:

# track the current notebook as transform

ln.track()

artifact = ln.Artifact.filter(key__contains="figures/matrixplot").one()

artifact.stage()


💡 notebook imports: ipython==8.21.0 lamindb==0.67.3 scanpy==1.9.8

💡 saved: Transform(uid='1LCd8kco9lZU6K79', name='Project flow', short_name='project-flow', version='0', type=notebook, updated_at=2024-02-17 11:19:46 UTC, created_by_id=1)

💡 saved: Run(uid='KmUOyB6AkvqqIx6FwSZG', run_at=2024-02-17 11:19:46 UTC, transform_id=7, created_by_id=1)

PosixUPath('/home/runner/work/lamin-usecases/lamin-usecases/docs/mydata/.lamindb/tf2zMB2JQ5OqStBvDFtl.png')

display(Image(filename=artifact.path))

We see that the image artifact is tracked as an input of the current notebook. The input is highlighted, the notebook follows at the bottom:

artifact.view_lineage()

Alternatively, we can also look at the sequence of transforms:

transform = ln.Transform.search("Bird's eye view", return_queryset=True).first()

transform.parents.df()

| id | uid | name | short_name | version | type | latest_report_id | source_code_id | reference | reference_type | created_at | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 4 | XBXZwUEzwJwfq1tI | Cell Ranger | None | 7.2.0 | pipeline | None | None | None | None | 2024-02-17 11:19:40.558322+00:00 | 2024-02-17 11:19:40.558341+00:00 | 1 |

transform.view_parents()
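To make the parent relationship more concrete, here is a minimal, library-free sketch of how a chain of transforms could be walked upstream. The `PARENTS` mapping and the `upstream` helper are hypothetical and only mirror the transform names used in the steps above; this is an illustration of the idea, not how LaminDB stores or queries lineage.

```python
# Hypothetical parent map (an assumption for illustration): each transform name
# points to the transforms whose outputs it consumed in the steps of this guide.
PARENTS = {
    "Upload GWS CRISPRa result": [],
    "GWS CRIPSRa analysis": ["Upload GWS CRISPRa result"],
    "Chromium 10x upload": [],
    "Cell Ranger": ["Chromium 10x upload"],
    "Postprocess Cell Ranger": ["Cell Ranger"],
    "Perform single cell analysis, integrate with CRISPRa screen": [
        "Postprocess Cell Ranger",
        "GWS CRIPSRa analysis",
    ],
}


def upstream(name, parents=PARENTS):
    """Return all ancestors of `name`, nearest first, without duplicates."""
    seen = []
    queue = list(parents.get(name, []))
    while queue:
        current = queue.pop(0)  # breadth-first: direct parents come first
        if current in seen:
            continue
        seen.append(current)
        queue.extend(parents.get(current, []))
    return seen


print(upstream("Postprocess Cell Ranger"))
# ['Cell Ranger', 'Chromium 10x upload']
```

In this toy model, the direct parents correspond to what `transform.parents` lists, while the full ancestor list is closer to what a lineage graph displays.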

Understand runs#

We tracked pipeline and notebook runs through run_context, which stores a Transform and a Run record as a global context.

Artifact objects are the inputs and outputs of runs.

What if I don’t want a global context?

Sometimes, we don’t want to create a global run context but manually pass a run when creating an artifact:

run = ln.Run(transform=transform)

ln.Artifact(filepath, run=run)

When does an artifact appear as a run input?

When accessing an artifact via stage(), load() or backed(), two things happen:

- The current run gets added to artifact.input_of.
- The transform of that artifact gets added as a parent of the current transform.
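The two effects can be illustrated with a small toy model. The classes below are hypothetical stand-ins written for this sketch, not LaminDB's actual implementation, and the run is passed explicitly here, whereas LaminDB takes it from the global run context.

```python
from dataclasses import dataclass, field


# eq=False keeps identity-based comparison, so two distinct runs never compare equal
@dataclass(eq=False)
class Transform:
    name: str
    parents: list = field(default_factory=list)


@dataclass(eq=False)
class Run:
    transform: Transform


@dataclass(eq=False)
class Artifact:
    key: str
    transform: Transform  # the transform that created this artifact
    input_of: list = field(default_factory=list)

    def load(self, current_run):
        # 1. the current run gets added to artifact.input_of
        if current_run not in self.input_of:
            self.input_of.append(current_run)
        # 2. the artifact's transform becomes a parent of the current transform
        if self.transform not in current_run.transform.parents:
            current_run.transform.parents.append(self.transform)
        return f"contents of {self.key}"


upload = Transform("Upload GWS CRISPRa result")
raw = Artifact("schmidt22-crispra-gws-IFNG.csv", transform=upload)

analysis = Transform("GWS CRIPSRa analysis")
run = Run(analysis)
raw.load(current_run=run)

print([r.transform.name for r in raw.input_of])  # ['GWS CRIPSRa analysis']
print([t.name for t in analysis.parents])        # ['Upload GWS CRISPRa result']
```

Loading the same artifact twice within one run leaves both lists unchanged in this sketch, which is why lineage stays a clean graph rather than accumulating duplicates.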

You can switch off auto-tracking of run inputs by setting ln.settings.track_run_inputs = False (see: Can I disable tracking run inputs?).

You can also track run inputs on a case-by-case basis via is_run_input=True, for example:

artifact.load(is_run_input=True)
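How the global setting and the per-call argument interact can be sketched as follows. This is a simplified model under the assumption that an explicit `is_run_input` takes precedence over `track_run_inputs`; the `Settings` class and the `should_track` helper are hypothetical names introduced for illustration.

```python
class Settings:
    """Hypothetical stand-in for the global settings object."""

    track_run_inputs = True  # global default: track inputs automatically


settings = Settings()


def should_track(is_run_input=None):
    """An explicit per-call argument wins; otherwise use the global setting."""
    if is_run_input is None:
        return settings.track_run_inputs
    return is_run_input


settings.track_run_inputs = False        # switch off auto-tracking globally
print(should_track())                    # False
print(should_track(is_run_input=True))   # True
```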

Query by provenance#

We can query or search for the notebook that created the artifact:

transform = ln.Transform.search("GWS CRIPSRa analysis", return_queryset=True).first()

And then find all the artifacts created by that notebook:

ln.Artifact.filter(transform=transform).df()

| id | uid | storage_id | key | suffix | accessor | description | version | size | hash | hash_type | n_objects | n_observations | transform_id | run_id | visibility | key_is_virtual | created_at | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 | nbu8fUvTfetpoeu7WDcY | 1 | None | .parquet | DataFrame | hits from schmidt22 crispra GWS | None | 18368 | Lrl1RWvFXNPR6s-hTFcVNA | md5 | None | None | 2 | 2 | 1 | True | 2024-02-17 11:19:38.170714+00:00 | 2024-02-17 11:19:38.170740+00:00 | 1 |

Which transform ingested a given artifact?

artifact = ln.Artifact.filter().first()

artifact.transform

Transform(uid='fzNOgdQYemP5bbfz', name='Upload GWS CRISPRa result', type='app', updated_at=2024-02-17 11:19:35 UTC, created_by_id=1)

And which user?

artifact.created_by

User(uid='DzTjkKse', handle='testuser1', name='Test User1', updated_at=2024-02-17 11:19:39 UTC)

Which transforms were created by a given user?

users = ln.User.lookup()

ln.Transform.filter(created_by=users.testuser2).df()

| id | uid | name | short_name | version | type | reference | reference_type | created_at | updated_at | latest_report_id | source_code_id | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

Which notebooks were created by a given user?

ln.Transform.filter(created_by=users.testuser2, type="notebook").df()

| id | uid | name | short_name | version | type | reference | reference_type | created_at | updated_at | latest_report_id | source_code_id | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

We can also view all recent additions to the entire database:

ln.view()


Artifact

| id | uid | storage_id | key | suffix | accessor | description | version | size | hash | hash_type | n_objects | n_observations | transform_id | run_id | visibility | key_is_virtual | created_at | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 10 | tf2zMB2JQ5OqStBvDFtl | 1 | figures/matrixplot_fig2_score-wgs-hits-per-clu... | .png | None | None | None | 28814 | 3ZZrahUWOjhpfAhm5V-BJw | md5 | None | None | 6 | 6 | 1 | True | 2024-02-17 11:19:45.986806+00:00 | 2024-02-17 11:19:45.986828+00:00 | 1 |
| 9 | 5bQzgKdDltZw3He1P3ea | 1 | figures/umap_fig1_score-wgs-hits.png | .png | None | None | None | 118999 | CdbDJlptwDoCY-yCqmXPJw | md5 | None | None | 6 | 6 | 1 | True | 2024-02-17 11:19:45.774582+00:00 | 2024-02-17 11:19:45.774608+00:00 | 1 |
| 8 | zvdcZmurpn4GJo6iNQbi | 1 | schmidt22_perturbseq.h5ad | .h5ad | AnnData | perturbseq counts | None | 20659936 | la7EvqEUMDlug9-rpw-udA | md5 | None | None | 5 | 5 | 1 | False | 2024-02-17 11:19:44.171112+00:00 | 2024-02-17 11:19:44.171142+00:00 | 1 |
| 7 | F2CMFjPN3wHkzcB8dNSb | 1 | perturbseq/filtered_feature_bc_matrix/features... | .tsv.gz | None | None | None | 6 | 4XZ8nQXNg13SCs1Mb1YW2Q | md5 | None | None | 4 | 4 | 1 | False | 2024-02-17 11:19:40.992034+00:00 | 2024-02-17 11:19:40.992051+00:00 | 1 |
| 6 | FdRpZ6KTDt2syBrNacxW | 1 | perturbseq/filtered_feature_bc_matrix/matrix.m... | .mtx.gz | None | None | None | 6 | iS6RdbtztWGppBSPqGl6mg | md5 | None | None | 4 | 4 | 1 | False | 2024-02-17 11:19:40.991422+00:00 | 2024-02-17 11:19:40.991445+00:00 | 1 |
| 5 | 5qIXSHItnxfS0LI53qhX | 1 | perturbseq/filtered_feature_bc_matrix/barcodes... | .tsv.gz | None | None | None | 6 | HdCm7m8It_j7C7tlHc0EqQ | md5 | None | None | 4 | 4 | 1 | False | 2024-02-17 11:19:40.990705+00:00 | 2024-02-17 11:19:40.990724+00:00 | 1 |
| 4 | nOIkLuKMZopLl8W9KxGV | 1 | fastq/perturbseq_R2_001.fastq.gz | .fastq.gz | None | None | None | 6 | rK5rFx5Y8as7WRzL63IP7w | md5 | None | None | 3 | 3 | 1 | False | 2024-02-17 11:19:39.587089+00:00 | 2024-02-17 11:19:39.587108+00:00 | 1 |

Run

| id | uid | transform_id | run_at | created_by_id | report_id | environment_id | is_consecutive | reference | reference_type | created_at |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | nW3MBgnRS9k7d54hTbRU | 1 | 2024-02-17 11:19:35.444201+00:00 | 1 | None | None | None | None | None | 2024-02-17 11:19:35.444283+00:00 |
| 2 | DYMlIYIkKXTDS6UAFHzQ | 2 | 2024-02-17 11:19:37.698197+00:00 | 1 | None | None | None | None | None | 2024-02-17 11:19:37.698272+00:00 |
| 3 | 12qHcH7rEAWGMrTmImh9 | 3 | 2024-02-17 11:19:39.147665+00:00 | 1 | None | None | None | None | None | 2024-02-17 11:19:39.147779+00:00 |
| 4 | 7pDgs4enKdEnyd0yZ3MT | 4 | 2024-02-17 11:19:40.560856+00:00 | 1 | None | None | None | None | None | 2024-02-17 11:19:40.560931+00:00 |
| 5 | ms3IYTSQPrkquaJdNy91 | 5 | 2024-02-17 11:19:41.002933+00:00 | 1 | None | None | None | None | None | 2024-02-17 11:19:41.003005+00:00 |
| 6 | qFEN3BlQqtaC7akJnDK3 | 6 | 2024-02-17 11:19:45.031984+00:00 | 1 | None | None | None | None | None | 2024-02-17 11:19:45.032115+00:00 |
| 7 | KmUOyB6AkvqqIx6FwSZG | 7 | 2024-02-17 11:19:46.276373+00:00 | 1 | None | None | None | None | None | 2024-02-17 11:19:46.276449+00:00 |

Storage

| id | uid | root | description | type | region | created_at | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|
| 1 | ZseFAdOD | /home/runner/work/lamin-usecases/lamin-usecase... | None | local | None | 2024-02-17 11:19:33.493674+00:00 | 2024-02-17 11:19:33.493692+00:00 | 1 |

Transform

| id | uid | name | short_name | version | type | latest_report_id | source_code_id | reference | reference_type | created_at | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 7 | 1LCd8kco9lZU6K79 | Project flow | project-flow | 0 | notebook | None | None | None | None | 2024-02-17 11:19:46.273820+00:00 | 2024-02-17 11:19:46.273846+00:00 | 1 |
| 6 | K2O4YD2BB3fyf0lI | Perform single cell analysis, integrate with C... | None | None | notebook | None | None | None | None | 2024-02-17 11:19:45.027228+00:00 | 2024-02-17 11:19:45.027257+00:00 | 1 |
| 5 | wzVm5MFDBL0pGRiJ | Postprocess Cell Ranger | None | 2.0 | pipeline | None | None | None | None | 2024-02-17 11:19:41.000430+00:00 | 2024-02-17 11:19:41.000450+00:00 | 1 |
| 4 | XBXZwUEzwJwfq1tI | Cell Ranger | None | 7.2.0 | pipeline | None | None | None | None | 2024-02-17 11:19:40.558322+00:00 | 2024-02-17 11:19:40.558341+00:00 | 1 |
| 3 | LdIroxuej8fsPNCU | Chromium 10x upload | None | None | pipeline | None | None | None | None | 2024-02-17 11:19:39.144970+00:00 | 2024-02-17 11:19:39.144990+00:00 | 1 |
| 2 | KMXWMNPjyZp8Au7e | GWS CRIPSRa analysis | None | None | notebook | None | None | None | None | 2024-02-17 11:19:37.693926+00:00 | 2024-02-17 11:19:37.693949+00:00 | 1 |
| 1 | fzNOgdQYemP5bbfz | Upload GWS CRISPRa result | None | None | app | None | None | None | None | 2024-02-17 11:19:35.441595+00:00 | 2024-02-17 11:19:35.441615+00:00 | 1 |

User

| id | uid | handle | name | created_at | updated_at |
|---|---|---|---|---|---|
| 2 | bKeW4T6E | testuser2 | Test User2 | 2024-02-17 11:19:37.686363+00:00 | 2024-02-17 11:19:40.550595+00:00 |
| 1 | DzTjkKse | testuser1 | Test User1 | 2024-02-17 11:19:33.490510+00:00 | 2024-02-17 11:19:39.137111+00:00 |


!lamin login testuser1

!lamin delete --force mydata

!rm -r ./mydata

✅ logged in with email testuser1@lamin.ai (uid: DzTjkKse)

💡 deleting instance testuser1/mydata

✅ deleted instance settings file: /home/runner/.lamin/instance--testuser1--mydata.env

✅ instance cache deleted

✅ deleted '.lndb' sqlite file

❗ consider manually deleting your stored data: /home/runner/work/lamin-usecases/lamin-usecases/docs/mydata